PromptMage

Simplify building multi-step LLM applications with version control and testing

Target Audience

- LLM Developers

- AI Researchers

- Technical Teams Building AI Workflows

Overview

PromptMage is a Python framework that helps developers create and manage complex AI workflows using large language models (LLMs). It handles the messy parts of prompt engineering by offering version control, testing tools, and automatic API generation. Teams can collaborate better and deploy reliable LLM applications faster without reinventing infrastructure.

Key Features

Version Control

Track prompt changes and collaborate seamlessly with built-in history
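
As a rough illustration of what prompt version tracking involves, the sketch below keeps an append-only history per prompt name. It is not PromptMage's actual API: `PromptHistory`, `save`, and `get` are hypothetical names invented for this example, and the store lives in memory only.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptHistory:
    """Hypothetical in-memory prompt store (not PromptMage's real API)."""

    versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        """Append a new version and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: int = 0) -> str:
        """Return the given 1-based version; 0 means the latest one."""
        history = self.versions[name]
        return history[-1] if version == 0 else history[version - 1]


store = PromptHistory()
store.save("summarize", "Summarize this review: {review}")
store.save("summarize", "Summarize this review in one sentence: {review}")
```

Because older versions are never overwritten, a teammate can still fetch version 1 with `store.get("summarize", 1)` after a newer version has been saved.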

Prompt Playground

Test and compare prompts side by side in a dedicated interface

Auto-API

Automatically generated FastAPI endpoints for deploying LLM workflows

Evaluation Mode

Validate prompt performance before production deployment
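
One way to picture pre-production validation is to score each prompt variant against a small set of labeled cases. The sketch below is an assumption-laden illustration, not PromptMage's evaluation mode: `fake_llm` is a stand-in for a real model call, and `pass_rate`, the test cases, and the templates are all made up for this example.

```python
from typing import Callable, Dict, List, Tuple


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: 'positive' if the prompt contains 'great'."""
    return "positive" if "great" in prompt.lower() else "negative"


def pass_rate(
    template: str,
    cases: List[Tuple[str, str]],
    llm: Callable[[str], str],
) -> float:
    """Fraction of cases where the model output equals the expected label."""
    passed = sum(
        llm(template.format(review=review)) == expected
        for review, expected in cases
    )
    return passed / len(cases)


# Hypothetical labeled cases and prompt variants.
cases = [
    ("Great battery life", "positive"),
    ("Stopped working after a week", "negative"),
]
variants: Dict[str, str] = {
    "v1": "Classify the sentiment: {review}",
    "v2": "Is this a great product? Review: {review}",
}

scores = {name: pass_rate(t, cases, fake_llm) for name, t in variants.items()}
```

With this toy model, the second variant leaks the word "great" into every prompt and so scores lower, which is the kind of regression a pre-deployment check is meant to catch.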

Use Cases

Analyze product reviews from multiple sources

Build custom LLM-powered web apps

Test multiple prompt variations simultaneously

Validate AI model performance metrics

Pros & Cons

Pros

- Built-in version control for prompt evolution tracking

- Local/self-hosted deployment options

- Automatic API generation reduces integration work

- Type-hinting support for error prevention

Cons

- Alpha status means breaking API changes are possible

- Requires Python development skills to implement

- Few third-party integrations are documented

Frequently Asked Questions

Can I use PromptMage for team collaboration?

Yes, built-in version control helps teams track prompt changes and work together effectively.

Is my data stored locally?

Yes, PromptMage is designed as a self-hosted solution, so your data stays under your control.

How do I integrate existing LLMs?

The framework provides API endpoints that can connect with your chosen LLM providers.

Alternatives to PromptMage

Streamline AI prompt development with version control and testing

Streamline AI prompt management with version control and team collaboration

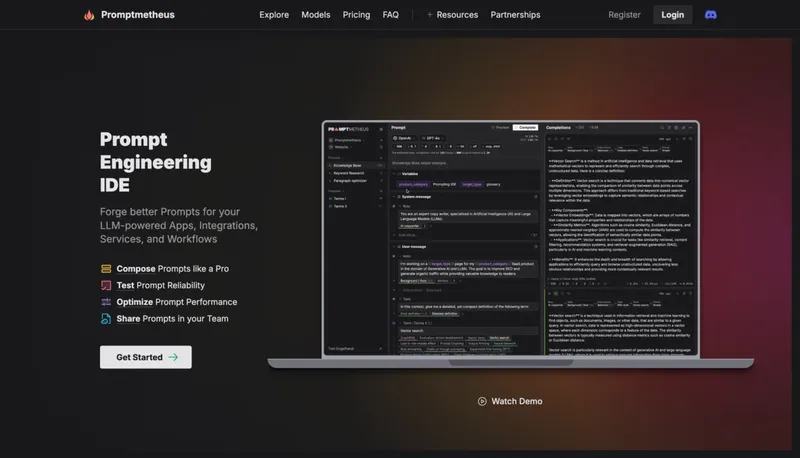

Forge optimized LLM prompts for AI applications and workflows

Streamline prompt engineering and LLM performance management

Streamline collaborative prompt engineering and testing workflows

Automate testing and deployment of large language model prompts

Streamline AI prompt creation and deployment workflows